PCI Express (PCIe) lanes form the backbone of modern high‑performance systems, enabling rapid data transfer between components. Optimizing their configuration and utilization is essential for maximizing system performance, especially when running multiple GPUs, NVMe storage devices, or specialized expansion cards.

Modern motherboards distribute PCIe lanes across various slots, each supporting different bandwidth levels. Knowing how many lanes your CPU and chipset provide is crucial, as mismatched configurations can lead to bottlenecks. Advanced users may adjust BIOS settings to allocate lanes more effectively and ensure that high‑demand components receive optimal bandwidth.

Techniques include ensuring that expansion slots are used in accordance with their maximum lane capabilities and that riser cables or adapters do not degrade signal quality. Keeping up with the latest firmware updates for your motherboard can also improve PCIe link stability by enabling improved error correction and dynamic bandwidth management.

Optimizing PCIe lanes is a vital task in achieving maximum data throughput in your high‑performance PC. By understanding lane distribution and making informed adjustments, you can eliminate bottlenecks and create a balanced system that meets the demands of advanced gaming, content creation, or computational workloads.

Ultimate Guide to PCIe Lanes: Optimization, Configuration & Performance

Unlock the full potential of your high-performance system by mastering PCIe lane configuration, bandwidth allocation, signal integrity, firmware tuning, and upcoming standards.

Introduction

PCI Express (PCIe) lanes form the backbone of modern high-performance systems, delivering bi-directional serial data links between CPUs, GPUs, NVMe SSDs, network adapters, and custom accelerators. With each successive PCIe generation, raw per-lane bandwidth doubles—making optimal lane configuration critical for eliminating I/O bottlenecks.

In this comprehensive guide, we explore lane allocation, version distinctions, motherboard slot wiring, firmware optimizations, signal-integrity best practices, virtualization features like SR-IOV, and emerging standards such as Compute Express Link (CXL). By the end, you’ll know how to fully leverage every available lane for maximum throughput and reliability.

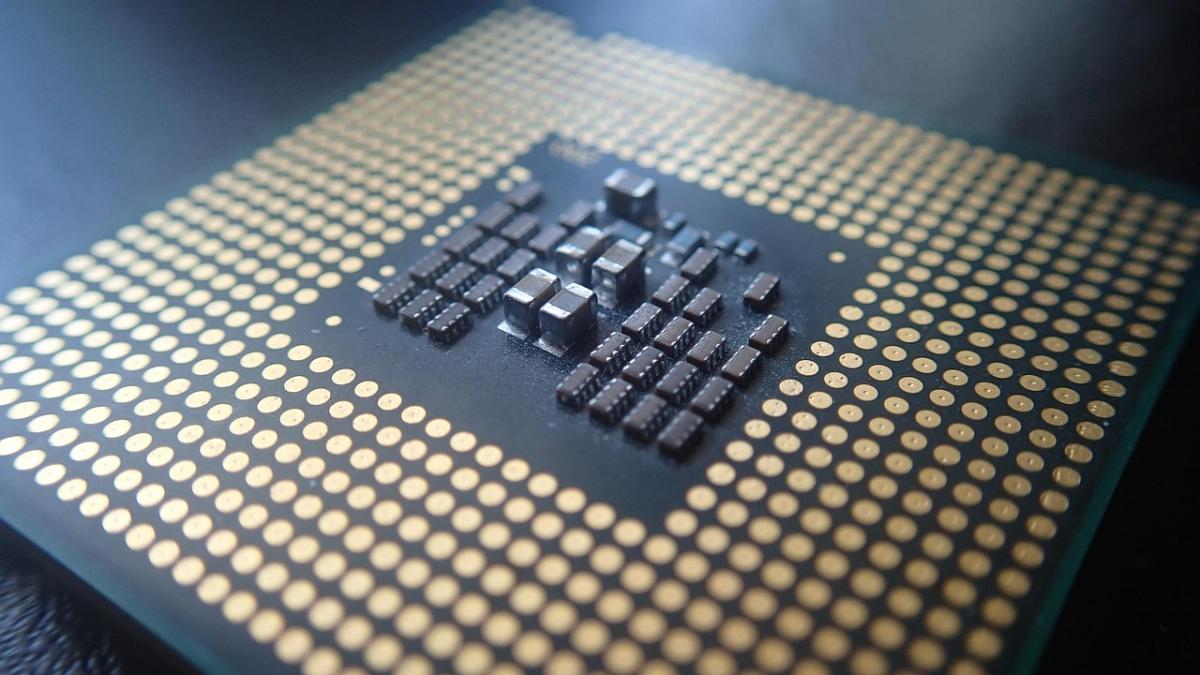

Understanding PCIe Architecture

At its core, PCIe uses point-to-point serial links arranged in lanes. Each lane consists of two differential signaling pairs, one for transmit and one for receive. Multiple lanes aggregate into links: x1, x4, x8, x16, or even x32. An x16 connection (common for GPUs) provides sixteen simultaneous differential pairs in each direction.

| Link Width | TX Pairs | RX Pairs | Typical Use |
|---|---|---|---|
| x1 | 1 | 1 | Legacy cards, low-speed devices |
| x4 | 4 | 4 | NVMe SSDs, mid-range NICs |
| x8 | 8 | 8 | High-end NICs, entry-level GPUs |
| x16 | 16 | 16 | Dedicated gaming GPUs, accelerators |

PCIe Versions & Bandwidth

PCIe has evolved rapidly since its introduction. Each new generation roughly doubles per-lane bandwidth (Gen 3 did so by pairing 8 GT/s with the more efficient 128b/130b encoding) while maintaining backward compatibility with older slots and cards.

- PCIe 1.0: 2.5 GT/s per lane (~250 MB/s)

- PCIe 2.0: 5.0 GT/s per lane (~500 MB/s)

- PCIe 3.0: 8.0 GT/s per lane (~985 MB/s)

- PCIe 4.0: 16.0 GT/s per lane (~1.97 GB/s)

- PCIe 5.0: 32.0 GT/s per lane (~3.94 GB/s)

- PCIe 6.0: 64.0 GT/s per lane (expected ~8 GB/s)

Matching the slot’s electrical wiring (Gen 3, 4, 5, etc.) with the device’s generation ensures you get the maximum negotiated link speed. A PCIe 4.0 SSD in a Gen 3 slot will auto-negotiate down to 8 GT/s, losing half its potential throughput.
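The per-lane figures listed above follow from the transfer rate and the line encoding. The sketch below reproduces them under the assumption that only encoding overhead is counted (8b/10b for Gen 1 and 2, 128b/130b for Gen 3 to 5); packet and protocol overhead reduce real throughput further. The names `GENERATIONS` and `link_bandwidth_gb_per_s` are illustrative.

```python
# Raw transfer rate (GT/s) and line-code efficiency for each generation.
# Gen 1-2 use 8b/10b encoding; Gen 3-5 use 128b/130b.
GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def link_bandwidth_gb_per_s(gen: int, lanes: int = 1) -> float:
    """Approximate one-direction bandwidth in GB/s, counting encoding overhead only."""
    rate_gt, efficiency = GENERATIONS[gen]
    return rate_gt * efficiency / 8 * lanes  # divide by 8 bits per byte


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(f"PCIe {gen}.0 x1: {link_bandwidth_gb_per_s(gen):.3f} GB/s")
    # A Gen 4 x4 SSD in a Gen 3 slot trains at the Gen 3 rate.
    print(f"Gen 4 x4: {link_bandwidth_gb_per_s(4, 4):.2f} GB/s")
    print(f"Gen 3 x4: {link_bandwidth_gb_per_s(3, 4):.2f} GB/s")
```

Comparing the last two lines shows the halving that occurs when a Gen 4 x4 drive negotiates down to Gen 3.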

Lane Allocation & Bifurcation

Motherboards distribute a finite pool of PCIe lanes provided by the CPU and chipset. High-end desktop (HEDT) CPUs can expose 44–64 lanes, while mainstream desktop CPUs often supply 16–20 lanes directly. Chipsets add another 8–24 lanes for storage, USB controllers, and onboard devices.

Bifurcation splits a wide slot into multiple narrower links. Common modes include:

- x16 → x8+x8: Dual GPU or GPU + NVMe adapter in one slot.

- x16 → x4+x4+x4+x4: Four NVMe drives via M.2 or U.2 adapters.

- x8 → x4+x4: Dual mid-speed devices in a single slot.

Always verify your motherboard’s bifurcation chart in the manual. Improper settings can disable slots or force links down to x1 width.
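As a quick sanity check before changing firmware settings, the arithmetic behind a bifurcation mode can be sketched as follows. This is only an illustration: the helper names are hypothetical, and passing the check does not mean a given board supports the mode, which only the manual can confirm.

```python
STANDARD_WIDTHS = {1, 2, 4, 8, 16}


def parse_bifurcation(mode: str) -> list[int]:
    """Turn a mode string such as 'x4+x4+x4+x4' into a list of link widths."""
    return [int(part.strip().lstrip("xX")) for part in mode.split("+")]


def is_valid_split(slot_width: int, mode: str) -> bool:
    """True if every link has a standard width and the links use exactly the slot's lanes."""
    widths = parse_bifurcation(mode)
    return all(w in STANDARD_WIDTHS for w in widths) and sum(widths) == slot_width


if __name__ == "__main__":
    for slot, mode in [(16, "x8+x8"), (16, "x4+x4+x4+x4"), (8, "x4+x4"), (16, "x8+x8+x8")]:
        print(f"x{slot} -> {mode}: {is_valid_split(slot, mode)}")
```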

CPU vs Chipset Lanes

Differentiating CPU-direct lanes from chipset lanes is crucial:

- CPU-direct lanes connect directly to the processor’s integrated PCIe controller for the lowest latency; ideal for GPUs and NVMe boot drives.

- Chipset lanes route through the Platform Controller Hub (PCH) and share a single uplink to the CPU (DMI on Intel platforms, a PCIe-based link on AMD), adding roughly 100 ns of latency. Best for secondary storage, USB, and network adapters.

Overloading the chipset uplink (e.g., too many Gen 4 NVMe drives on chipset lanes) can saturate it, causing higher latencies and lower throughput than CPU-direct lanes.
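A rough way to reason about this saturation is to compare the combined peak demand of chipset-attached devices with the uplink bandwidth. The sketch below assumes a hypothetical uplink equivalent to PCIe 4.0 x8 (about 15.75 GB/s) and three Gen 4 x4 SSDs at about 7.88 GB/s each; check your platform's actual uplink width and generation before drawing conclusions.

```python
def uplink_oversubscription(device_gb_per_s: list[float], uplink_gb_per_s: float) -> float:
    """Ratio of combined peak device demand to chipset uplink bandwidth.

    A value above 1.0 means the devices cannot all run at full speed at once.
    """
    return sum(device_gb_per_s) / uplink_gb_per_s


if __name__ == "__main__":
    # Assumed figures, for illustration only.
    ssds = [7.88, 7.88, 7.88]   # three Gen 4 x4 SSDs, GB/s each
    uplink = 15.75              # uplink equivalent to Gen 4 x8, GB/s
    ratio = uplink_oversubscription(ssds, uplink)
    print(f"Oversubscription ratio: {ratio:.2f}")
```

Drives rarely peak simultaneously, so a ratio somewhat above 1.0 is often tolerable; the number mainly flags configurations worth moving to CPU-direct lanes.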

Expansion Slot Configuration

To ensure maximum performance:

- Populate the primary GPU slot (usually slot 1, wired x16) with your fastest card.

- If installing multiple GPUs, reference your manual to use slots wired x16/x8/x8 rather than x16/x4/x4.

- For NVMe add-in cards or M.2 adapters, choose slots that bifurcate from CPU lanes whenever possible.

| Slot Label | Wiring | Recommended Device |
|---|---|---|
| PCIe_1 | x16 (CPU) | Primary GPU |
| PCIe_2 | x8 (CPU) | Secondary GPU or NVMe Adapter |
| PCIe_3 | x4 (Chipset) | 10GbE NIC, USB Controller |

Firmware & BIOS Tweaks

Modern UEFI BIOS menus offer granular PCIe settings. Key tweaks include:

- Link Speed: Manually force Gen 3, 4, or 5 for compatibility with older cards or marginal risers.

- Link Width: Enable or disable bifurcation modes (x8/x8, x4/x4/x4/x4).

- ASPM: Active State Power Management puts idle links into low-power states (L0s, L1) but adds wake-up latency; disable for critical low-latency devices.

- PCIe Spread Spectrum: Reduces EMI but can introduce jitter; toggle off for high-speed NVMe arrays.
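On Linux, the ASPM policy the kernel is using can be checked alongside the firmware setting. The kernel lists the available policies in `/sys/module/pcie_aspm/parameters/policy` and marks the active one with square brackets. The sketch below parses that format; the function names are illustrative, and the file may be absent on kernels built without ASPM support.

```python
import re
from pathlib import Path
from typing import Optional

ASPM_POLICY_PATH = Path("/sys/module/pcie_aspm/parameters/policy")


def active_aspm_policy(text: str) -> Optional[str]:
    """Extract the bracketed (active) policy from the sysfs policy string."""
    match = re.search(r"\[(\w+)\]", text)
    return match.group(1) if match else None


def read_aspm_policy(path: Path = ASPM_POLICY_PATH) -> Optional[str]:
    """Return the active policy name, or None if the file cannot be read."""
    try:
        return active_aspm_policy(path.read_text())
    except OSError:
        return None


if __name__ == "__main__":
    print(f"Active ASPM policy: {read_aspm_policy()}")
```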

Always update your motherboard’s firmware to the latest stable release. Vendors frequently improve link training and error handling, and list such changes in the release notes.

Signal Integrity & Quality

High-speed serial links at 16–64 GT/s demand pristine signal paths. Key considerations:

- Use back-panel slot positions when possible; mid-board traces can degrade signal quality.

- Avoid long riser cables or cheap adapters—choose certified PCIe Gen 4/5 risers with retimers or redrivers built in.

- Maintain proper PCB trace impedance; expect ~85 Ω differential.

- Keep slot population symmetrical (don’t leave every other slot empty) to avoid crosstalk and reflections.

- On motherboards advertised as “PCIe Gen 5 Ready,” check whether the Gen 5 slots use retimers; they help links train reliably over longer trace runs.

Dynamic Bandwidth Management & SR-IOV

Advanced platforms support dynamic lane reallocation and virtualization features:

- Dynamic Bandwidth Allocation: Some server BIOS/firmware can shift free lanes from lightly-loaded slots to congested ones on-the-fly.

- SR-IOV (Single Root I/O Virtualization): Split a single physical device (NIC, HBA) into multiple virtual functions that appear as separate PCIe endpoints to VMs.

- VT-d / AMD-Vi: Direct device assignment into guest OSes for near-bare-metal performance in virtualized environments.
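On Linux, SR-IOV capable devices expose `sriov_totalvfs` (the maximum number of virtual functions) and `sriov_numvfs` (the number currently enabled) in their sysfs directory. The following is a minimal sketch that reads both; the device address in the example is hypothetical, and the function name is illustrative.

```python
from pathlib import Path
from typing import Optional, Tuple


def sriov_vf_counts(device_dir: Path) -> Optional[Tuple[int, int]]:
    """Return (enabled VFs, maximum VFs), or None if the device lacks SR-IOV."""
    try:
        enabled = int((device_dir / "sriov_numvfs").read_text())
        maximum = int((device_dir / "sriov_totalvfs").read_text())
    except (OSError, ValueError):
        return None
    return enabled, maximum


if __name__ == "__main__":
    # Hypothetical device address; substitute one reported by `lspci -D`.
    device = Path("/sys/bus/pci/devices/0000:03:00.0")
    print(sriov_vf_counts(device))
```

A result of `None` means the attributes are missing, which usually indicates the device or its driver does not offer SR-IOV.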

Real-World Use Cases & Benchmarks

When optimized:

- NVMe RAID striped across multiple x4 links can sustain 12 GB/s sequential reads with PCIe 4.0 SSDs (a single Gen 4 x4 link tops out near 7.9 GB/s).

- Dual GPU rendering on x8/x8 yields within 5% of dual x16 performance in GPU-accelerated workloads.

- 25/100 GbE NICs are most likely to sustain line rate on CPU-direct x8 or x16 links; chipset lanes share the uplink and can cap out under heavy concurrent traffic, although a single 10 GbE NIC usually fits comfortably there.
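To see why faster NICs need wider links, the line rate can be compared with per-lane bandwidth. This sketch uses the approximate per-lane figures for Gen 3 to 5 and ignores packet overhead, so treat the result as a lower bound on the width required; the names are illustrative.

```python
from typing import Optional

# Approximate one-direction bandwidth per lane in GB/s (encoding overhead only).
PER_LANE_GB_PER_S = {3: 0.985, 4: 1.969, 5: 3.938}
STANDARD_WIDTHS = (1, 2, 4, 8, 16)


def min_link_width(nic_gbit_per_s: float, gen: int) -> Optional[int]:
    """Smallest standard link width whose bandwidth covers the NIC line rate."""
    needed_gb_per_s = nic_gbit_per_s / 8  # convert gigabits to gigabytes
    for width in STANDARD_WIDTHS:
        if PER_LANE_GB_PER_S[gen] * width >= needed_gb_per_s:
            return width
    return None  # even x16 is not enough at this generation


if __name__ == "__main__":
    for speed in (10, 25, 100):
        for gen in (3, 4, 5):
            print(f"{speed} GbE on Gen {gen}: x{min_link_width(speed, gen)}")
```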

Troubleshooting & Diagnostics

Encounter a mismatched speed or width? Try these steps:

- Inspect “lspci -vv” (Linux, run as root) or Device Manager (Windows) to compare the negotiated Link Status (LnkSta) against the device’s Link Capabilities (LnkCap).

- Swap cards between slots to isolate a faulty slot or riser cable.

- Reset CMOS and load BIOS defaults, then reapply bifurcation/link settings manually.

- Run signal integrity tests in the BIOS diagnostics or vendor-provided utilities.
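The first step above can be automated by comparing the `LnkCap` and `LnkSta` lines that `lspci -vv` prints for a device. The sketch below parses the text for a single device; the sample string is a hypothetical excerpt, and the exact field layout can vary between pciutils versions.

```python
import re

# Matches e.g. "LnkSta: Speed 8GT/s (downgraded), Width x8 (downgraded)".
LINK_RE = re.compile(r"(LnkCap|LnkSta):.*?Speed ([\d.]+)GT/s.*?Width x(\d+)")

# Hypothetical excerpt from `lspci -vv` for one device.
SAMPLE = """\
    LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM L1, Exit Latency L1 <64us
    LnkSta: Speed 8GT/s (downgraded), Width x8 (downgraded)
"""


def parse_link(lspci_text: str) -> dict[str, tuple[float, int]]:
    """Map 'LnkCap' and 'LnkSta' to (speed in GT/s, width in lanes)."""
    result = {}
    for line in lspci_text.splitlines():
        match = LINK_RE.search(line)
        if match:
            result[match.group(1)] = (float(match.group(2)), int(match.group(3)))
    return result


def is_degraded(lspci_text: str) -> bool:
    """True if the negotiated speed or width is below the device's capability."""
    link = parse_link(lspci_text)
    if "LnkCap" not in link or "LnkSta" not in link:
        return False
    cap_speed, cap_width = link["LnkCap"]
    sta_speed, sta_width = link["LnkSta"]
    return sta_speed < cap_speed or sta_width < cap_width


if __name__ == "__main__":
    print(parse_link(SAMPLE))
    print("Degraded:", is_degraded(SAMPLE))
```

A degraded result is not always a fault: a Gen 4 card in a Gen 3 slot reports the same mismatch, so compare against the slot's wiring before swapping hardware.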

Future Trends (PCIe 6.0, CXL, Unikernels)

The I/O landscape continues to evolve rapidly:

- PCIe 6.0: Doubles throughput again to 64 GT/s with PAM4 signaling; expect roughly 8 GB/s per lane and 128 GB/s per direction on x16 links.

- Compute Express Link (CXL): Built on PCIe 5.0/6.0 physical layer, adds coherent memory access and pooling across CPUs, GPUs, and accelerators.

- Unikernels & MicroVMs: Ultra-lightweight container/VM hybrids that leverage PCIe SR-IOV for direct device pass-through.

- Optical PCIe: Emerging platforms exploring fiber for sub-meter high-bandwidth lanes without EMI constraints.

Conclusion

Optimizing PCIe lanes is a multi-faceted discipline: selecting the right slot and bifurcation mode, updating firmware, ensuring signal integrity, and leveraging virtualization features. Each of these steps can recover lost throughput, reduce latency, and improve system balance.

Keep abreast of BIOS updates, consult your motherboard’s wiring chart, and plan lane distribution around CPU-direct vs chipset lanes. With PCIe 6.0 and CXL on the horizon, mastering these fundamentals today ensures you’re ready for tomorrow’s ultra-high-speed computing platforms.