As deep learning advances, researchers and developers increasingly need a high-performance PC that can run AI workloads locally. A system optimized for deep learning requires careful hardware selection and software configuration to accelerate model training and inference.

Invest in high‑performance GPUs with ample VRAM, paired with multi‑core CPUs to handle data preprocessing. Configure your system with fast NVMe storage to reduce data bottlenecks. Install the latest drivers and deep learning frameworks, such as TensorFlow or PyTorch, to leverage hardware acceleration. Adjust thermal systems to manage the increased workload, and optimize power settings to maintain consistent performance.

By tailoring your PC to handle deep learning tasks, you can dramatically shorten training times and efficiently manage large datasets. With the right hardware, software, and thermal management, your system will be well‑equipped for cutting‑edge AI research.

Ultimate Guide to Building a High-Performance Deep Learning PC for AI Workloads

Leverage the best hardware and software configurations to accelerate your AI research, shorten training times, and achieve peak performance.

Introduction

As deep learning continues to revolutionize industries—from autonomous driving to medical imaging—researchers and developers need workstations capable of handling demanding AI workloads. A high-performance PC optimized for deep learning not only accelerates model training and inference but also ensures you can experiment rapidly with cutting-edge architectures.

In this guide, we’ll dive deep into hardware selection, software setup, thermal and power management, benchmarking, and best practices. You’ll learn how to assemble a deep learning PC that maximizes throughput, minimizes bottlenecks, and stays cool under heavy loads.

The Importance of Hardware in Deep Learning

Why Hardware Matters

Deep neural networks grind through billions of matrix multiplications. Without the right hardware, data pipelines choke, GPUs idle, and training times stretch from hours to days. Optimizing your PC for AI workloads is more than fast parts—it’s about balancing compute, memory, storage, cooling, and power for consistent throughput.

Core Components at a Glance

- High-performance GPU: The heart of model training and inference.

- Multi-core CPU: Feeds data, handles augmentation, and runs pre- and post-processing pipelines.

- NVMe Storage: Eliminates data bottlenecks for massive datasets.

- System RAM: Keeps large tensors in memory during preprocessing.

- Thermal Management: Sustains peak clock speeds without throttling.

- Power Supply: Delivers stable, high-current rails to GPUs and CPU.

Choosing the Right GPU

Consumer vs Professional GPUs

Consumer-grade cards (e.g., NVIDIA RTX series) offer excellent price/performance, while professional GPUs (e.g., NVIDIA A100, H100) deliver larger VRAM, ECC memory, and NVLink support. Your choice hinges on dataset size, budget, and multi-GPU scaling needs.

Key GPU Specs to Compare

| GPU Model | VRAM | FP16 Tensor TFLOPS (dense, FP32 accumulate) | Memory Bandwidth | Price Range |
|---|---|---|---|---|
| NVIDIA RTX 4090 | 24 GB GDDR6X | ~165 | 1,008 GB/s | $1,600–2,000 |
| NVIDIA A100 (40 GB) | 40 GB HBM2 | 312 | 1,555 GB/s | $10,000+ |
| NVIDIA RTX 3090 | 24 GB GDDR6X | ~71 | 936 GB/s | $1,500–1,800 |
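
To judge whether a model fits in a given amount of VRAM, a back-of-the-envelope estimate of model state can help. The sketch below assumes plain FP32 training with the Adam optimizer (weights, gradients, and two optimizer moments, 16 bytes per parameter) and FP16 weights for inference. Activations and framework overhead are deliberately left out, and the 1B-parameter model is a hypothetical example, so treat the result as a lower bound.

```python
# Rough memory needed for model state only.
# Assumption: activations, CUDA context, and framework overhead are NOT included.

BYTES_PER_PARAM = {
    "fp32_adam": 4 + 4 + 8,  # FP32 weights + FP32 grads + two FP32 Adam moments
    "fp16_inference": 2,     # half-precision weights only
}


def model_state_gib(num_params: int, mode: str) -> float:
    """Return the memory for model state in GiB for the given mode."""
    return num_params * BYTES_PER_PARAM[mode] / 2**30


if __name__ == "__main__":
    params = 1_000_000_000  # hypothetical 1B-parameter model
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: {model_state_gib(params, mode):.1f} GiB")
```

Under these assumptions, a 1B-parameter model already needs roughly 15 GiB of a 24 GB card for training state before any activations are stored, which is why VRAM is usually the first spec to check.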

Multi-GPU and Connectivity

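If your research demands multi-GPU scaling, look for motherboards with enough PCIe lanes and slot spacing for the cards you plan to install. Where the GPU supports it, NVLink bridges deliver low-latency peer-to-peer communication, which matters for model parallelism and large-batch training. Check support per card: the RTX 3090 has an NVLink connector, while the RTX 4090 does not.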

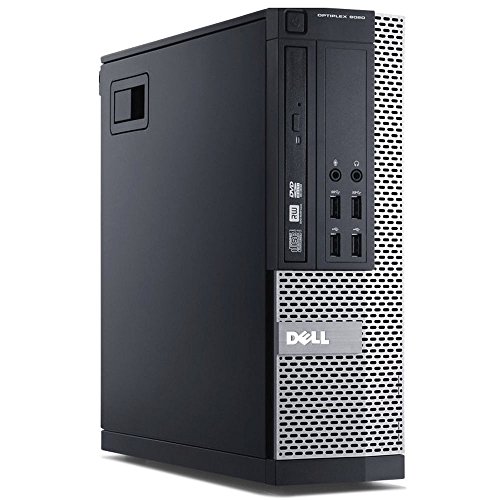

CPU and Motherboard Compatibility

Selecting a CPU

- Core Count & Threads: 16–64 cores ensure swift data preprocessing and parallel I/O.

- Clock Speed: Single-threaded work, such as data augmentation scripts, benefits from high clock speeds.

- Instruction Sets: AVX2 and AVX-512 speed up vectorized tensor operations that run on the CPU.

Motherboard Essentials

- PCIe 4.0/5.0 Support: Future-proof bandwidth for next-gen GPUs and NVMe drives.

- Robust VRM Design: Stable CPU power under long epochs.

- Ample M.2 Slots: Install multiple NVMe SSDs for dataset staging and caching.

- Memory Channels: 4- or 8-channel ECC memory for reliability and throughput.
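
A quick lane budget makes the PCIe requirement concrete. This is a minimal sketch that assumes each GPU wants a full x16 link and each NVMe drive an x4 link, and that all devices hang directly off the CPU; chipset-attached lanes and slot bifurcation are ignored. The lane counts passed in at the bottom are hypothetical, so look up the real figure for your CPU and board.

```python
def lanes_required(num_gpus: int, num_nvme: int,
                   lanes_per_gpu: int = 16, lanes_per_nvme: int = 4) -> int:
    """Total CPU PCIe lanes needed if every device gets a full-width link."""
    return num_gpus * lanes_per_gpu + num_nvme * lanes_per_nvme


def fits(cpu_lanes: int, num_gpus: int, num_nvme: int) -> bool:
    """True if the platform exposes enough lanes for the planned devices."""
    return lanes_required(num_gpus, num_nvme) <= cpu_lanes


if __name__ == "__main__":
    # Hypothetical platforms: a 24-lane desktop CPU and a 128-lane workstation CPU.
    for cpu_lanes in (24, 128):
        ok = fits(cpu_lanes, num_gpus=2, num_nvme=2)
        print(f"{cpu_lanes} lanes, 2 GPUs + 2 NVMe: {'fits' if ok else 'does not fit'}")
```

When the budget does not fit, boards typically drop GPUs to x8 links, which may be acceptable for some workloads but is worth knowing before buying.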

Memory and Storage Considerations

System RAM

For large datasets and preprocessing pipelines, aim for 64–256 GB of DDR4/DDR5 RAM. ECC memory adds reliability when you can’t afford silent errors.

NVMe SSD vs. SATA SSD vs. HDD

- NVMe SSD: 3,000–7,000 MB/s read/write—ideal for loading millions of images or large TFRecords.

- SATA SSD: 500–600 MB/s—good for operating system and frequently used code libraries.

- HDD: 100–200 MB/s—use for cold storage of archives and backups.
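
The throughput ranges above translate directly into how long one full pass over a dataset takes. The sketch below assumes purely sequential reads at the quoted rates and a hypothetical 500 GB dataset; real pipelines with many small files will usually be slower.

```python
def read_seconds(dataset_gb: float, throughput_mb_s: float) -> float:
    """Time to read a dataset once at a sustained sequential rate (1 GB = 1000 MB)."""
    return dataset_gb * 1000 / throughput_mb_s


if __name__ == "__main__":
    dataset_gb = 500  # hypothetical dataset size
    # (low, high) sequential throughput in MB/s, taken from the list above
    tiers = {"NVMe SSD": (3000, 7000), "SATA SSD": (500, 600), "HDD": (100, 200)}
    for name, (low, high) in tiers.items():
        fastest = read_seconds(dataset_gb, high) / 60
        slowest = read_seconds(dataset_gb, low) / 60
        print(f"{name}: {fastest:.1f} to {slowest:.1f} minutes per pass")
```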

RAID and Caching

Software RAID-0 stripes multiple NVMe drives to boost sequential throughput, but consider RAID-1 or ZFS for redundancy if data integrity is critical.

Thermal Management

Case & Airflow

- Full-tower cases with mesh front panels maximize intake airflow.

- High static-pressure fans on radiators push air through dense fin arrays.

- Positive pressure setups reduce dust accumulation inside the chassis.

Air Cooling vs Liquid Cooling

Large air coolers can handle high TDP CPUs, but AIO liquid loops or custom water-cooling deliver lower temperatures and quieter operation under sustained GPU loads.

GPU Cooling Options

- Aftermarket air shrouds with dual- or triple-fan designs.

- Hybrid coolers that pair an AIO liquid block on the GPU die with a blower fan for memory and VRMs.

- Full-cover water blocks in custom loops for multiple GPUs.

Power Supply and Stability

- Wattage Headroom: Sum total GPU and CPU draw, then add 25–30% buffer.

- 80 Plus Gold/Platinum: Ensures efficiency under high loads and reduces heat.

- Modular Cabling: Cleaner builds improve airflow and maintenance.

- Multiple 12 V Rails vs Single-Rail: Single-rail simplifies GPU power delivery.
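
The headroom rule from the first bullet is simple arithmetic. The sketch below sums component draw and applies the 25–30% buffer; the wattages are hypothetical placeholders, so substitute the rated figures for your own parts.

```python
def recommended_psu_watts(component_watts, headroom: float = 0.25) -> float:
    """Sum the component power draw and add a fractional safety buffer."""
    return sum(component_watts) * (1 + headroom)


if __name__ == "__main__":
    # Hypothetical build: one 450 W GPU, a 250 W CPU, 100 W for drives, fans, and board.
    draw = [450, 250, 100]
    for headroom in (0.25, 0.30):
        watts = recommended_psu_watts(draw, headroom)
        print(f"{int(headroom * 100)}% headroom: {watts:.0f} W")
```

Rounding up to the next standard PSU size is the usual final step.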

Software Stack Optimization

Operating System: Linux vs Windows

Linux distributions (Ubuntu, CentOS) dominate research clusters with better driver support, package management, and scriptability. Windows is fine for small-scale development or when specific tools require it.

GPU Drivers & CUDA Toolkit

- Install the latest NVIDIA driver that supports your CUDA version.

- Use the CUDA Toolkit to compile custom kernels, and install cuDNN for accelerated deep learning primitives.

- Use `nvidia-smi` and `nvtop` to monitor GPU utilization, memory usage, and thermals.
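
For logging these numbers from a script rather than watching a terminal, `nvidia-smi` can emit CSV through its `--query-gpu` option. The following is a minimal sketch: the helper names are illustrative, fields that the driver reports as unavailable are mapped to `None`, and the function returns an empty list when `nvidia-smi` is missing or fails.

```python
import shutil
import subprocess

QUERY = "utilization.gpu,memory.used,memory.total,temperature.gpu"


def _to_int(value: str):
    """Convert a CSV field to int, or None for values such as '[N/A]'."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_line(line: str) -> dict:
    """Parse one CSV row produced with --format=csv,noheader,nounits."""
    util, mem_used, mem_total, temp = (_to_int(f) for f in line.split(","))
    return {"util_pct": util, "mem_used_mib": mem_used,
            "mem_total_mib": mem_total, "temp_c": temp}


def query_gpus() -> list:
    """Return one dict per GPU, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except subprocess.CalledProcessError:
        return []
    return [parse_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    for index, gpu in enumerate(query_gpus()):
        print(index, gpu)
```

Calling `query_gpus()` in a loop with a short sleep gives a simple time series you can compare against training throughput.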

Deep Learning Frameworks

- TensorFlow: Offers strong support for distributed training and TensorRT integration for inference optimization.

- PyTorch: Favored for its dynamic graph, ease of debugging, and thriving community tools like TorchServe.

- Containerization: Docker images (NVIDIA NGC, TensorFlow Official) guarantee reproducible environments.

Environment & Package Management

Use Conda or virtualenv to isolate project dependencies. Pin package versions to avoid “it works on my machine” scenarios and speed up container builds.
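
As one way to capture pinned versions, the sketch below lists every package installed in the current environment as `name==version` lines using the standard library's `importlib.metadata`. It is roughly what `pip freeze` prints, minus special cases such as editable installs, and the function name is illustrative.

```python
from importlib import metadata


def pinned_requirements() -> list:
    """Return sorted 'name==version' lines for installed distributions."""
    pins = set()
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with missing metadata
            pins.add(f"{name}=={dist.version}")
    return sorted(pins, key=str.lower)


if __name__ == "__main__":
    # Redirect this output to requirements.txt to record the environment.
    print("\n".join(pinned_requirements()))
```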

Networking and Remote Access

Remote Development

- SSH Tunnels: Securely forward Jupyter or VS Code remote sessions.

- JupyterLab & JupyterHub: Browser-based interactive notebooks on your deep learning PC.

- Port Forwarding: Expose REST inference endpoints to your local workstation.
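
An SSH tunnel for a remote Jupyter server boils down to a single `ssh -N -L` invocation. The helper below only builds that command so you can inspect it before running it; the host name `user@dl-box` and port 8888 are placeholders, not values from any particular setup.

```python
import shlex


def ssh_tunnel_argv(user_host: str, remote_port: int = 8888,
                    local_port: int = 8888) -> list:
    """Build an ssh command that forwards local_port to remote_port on the host.

    -N: do not run a remote command, just forward.
    -L: local port forwarding in the form local:host:remote.
    """
    return ["ssh", "-N", "-L", f"{local_port}:localhost:{remote_port}", user_host]


if __name__ == "__main__":
    # Hypothetical host; pass the list to subprocess.run(...) to open the tunnel.
    print(shlex.join(ssh_tunnel_argv("user@dl-box")))
```

With the tunnel open, the remote notebook is reachable at `localhost` on the chosen local port without exposing it to the network.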

High-Speed Interconnects

For multi-node clusters, consider 10 GbE or InfiniBand NICs to accelerate distributed gradient all-reduce operations.
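
To see why link speed matters, consider a bandwidth-only estimate for one gradient all-reduce. The sketch assumes a ring all-reduce, in which each node sends about 2(N-1)/N times the gradient size, and it ignores latency, protocol overhead, and overlap with computation. The 1 GB gradient size and the 100 Gbit/s figure standing in for InfiniBand are hypothetical inputs.

```python
def ring_allreduce_seconds(gradient_gb: float, nodes: int, link_gbit_s: float) -> float:
    """Bandwidth-only lower bound for one ring all-reduce across `nodes` machines."""
    if nodes < 2:
        return 0.0  # nothing to exchange on a single node
    traffic_gb = 2 * (nodes - 1) / nodes * gradient_gb  # data sent per node
    link_gb_s = link_gbit_s / 8                         # Gbit/s -> GB/s
    return traffic_gb / link_gb_s


if __name__ == "__main__":
    # Hypothetical job: 1 GB of gradients per step, 4 nodes.
    for name, gbit in (("10 GbE", 10), ("100 Gbit/s link", 100)):
        seconds = ring_allreduce_seconds(1.0, nodes=4, link_gbit_s=gbit)
        print(f"{name}: {seconds:.2f} s per all-reduce")
```

If the estimated time per all-reduce is comparable to the compute time of a training step, the network is likely to be the bottleneck.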

Benchmarking and Profiling

Benchmark Tools

- TensorBoard: Visualize training throughput, GPU memory usage, and learning curves.

- NVIDIA Nsight Systems & Compute: Profile kernel launches, memory transfers, and PCIe traffic.

- PyTorch Profiler: Pinpoint CPU bottlenecks in data loading and preprocessing.

Key Metrics to Track

- Throughput (samples/sec)

- GPU Utilization (%)

- Memory Utilization (GB)

- PCIe Bandwidth (GB/s)

- CPU Load and I/O Wait (%)
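
Throughput is the easiest of these metrics to measure yourself. The helper below is a framework-agnostic sketch: `step_fn` is a hypothetical callable that runs one training step, and a few warm-up steps are excluded from timing. For GPU work, the step should block until the device has finished (in PyTorch, by calling `torch.cuda.synchronize()` at the end), otherwise the timer only measures kernel launch time.

```python
import time


def measure_throughput(step_fn, batch_size: int, steps: int, warmup: int = 2) -> float:
    """Return samples per second for `steps` timed calls of step_fn."""
    for _ in range(warmup):  # warm-up iterations are not timed
        step_fn()
    start = time.perf_counter()
    for _ in range(steps):
        step_fn()
    elapsed = time.perf_counter() - start
    return batch_size * steps / elapsed


if __name__ == "__main__":
    # Stand-in workload; replace with a real training step.
    def dummy_step():
        sum(i * i for i in range(50_000))

    rate = measure_throughput(dummy_step, batch_size=64, steps=20)
    print(f"{rate:.0f} samples/sec")
```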

Tips and Best Practices for Deep Learning Optimization

- Mixed-Precision Training: Use FP16 or BFLOAT16 to leverage Tensor Cores and reduce memory footprint.

- Gradient Accumulation: Simulate larger batch sizes on limited-VRAM GPUs.

- Data Pipeline Parallelism: Overlap CPU preprocessing and GPU training with asynchronous data loaders.

- Checkpointing & Resume: Save intermediate weights frequently to guard against power loss or crashes.

- Automated Hyperparameter Search: Use tools like Optuna or Ray Tune to explore learning rates, batch sizes, and optimizers efficiently.

- Version Control for Data and Code: DVC or Git LFS track large datasets alongside model code for reproducibility.
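
Gradient accumulation from the list above can be checked numerically without any framework. This is a toy sketch, assuming a one-parameter linear model with mean squared error and equally sized micro-batches: averaging the micro-batch gradients reproduces the full-batch gradient, which is why scaling each micro-batch loss by the number of accumulation steps before the backward pass simulates a larger batch. The data points are made up for illustration.

```python
def mse_grad(w: float, batch) -> float:
    """Gradient of mean squared error for y_hat = w * x over (x, y) pairs."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, data, micro_batch_size: int) -> float:
    """Accumulate scaled micro-batch gradients (assumes equal-sized micro-batches)."""
    micro_batches = [data[i:i + micro_batch_size]
                     for i in range(0, len(data), micro_batch_size)]
    accum_steps = len(micro_batches)
    grad = 0.0
    for micro_batch in micro_batches:
        # Same effect as scaling the loss by 1 / accum_steps before backward.
        grad += mse_grad(w, micro_batch) / accum_steps
    return grad  # the optimizer step would happen once, after this loop


if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]  # made-up samples
    w = 0.5
    print("full batch :", mse_grad(w, data))
    print("accumulated:", accumulated_grad(w, data, micro_batch_size=2))
```

The two values agree up to floating-point rounding; only the peak memory differs, since each micro-batch is processed on its own.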

Conclusion

Building a deep learning PC tailored for AI workloads is an investment in your research velocity. By selecting the right GPUs, pairing them with multi-core CPUs, fast NVMe storage, and robust cooling and power solutions, you eliminate common bottlenecks that slow down experimentation.

On the software side, keep your drivers, CUDA toolkit, and frameworks like TensorFlow or PyTorch up to date. Monitor performance with profiling tools, leverage mixed-precision training, and maintain reproducible environments to ensure every epoch counts.

With this end-to-end optimization—from hardware acceleration and thermal management to software configuration and best practices—you’ll slash training times, handle massive datasets effortlessly, and push the boundaries of AI research.